This article was first published in 2018 and updated in 2021.

As both Apple’s App Store and the Google Play Store have grown, it has become increasingly important to stand out and ensure you are doing everything within your power to maximize your engagement opportunities in both stores.

A great way to do so is mobile app A/B testing. It gives you a tangible, methodical way to keep improving your conversion rates and overall results.

What is Mobile A/B testing?

A/B testing simply means that you create two versions of something where each one is slightly different and test them against each other with an audience sample. In the mobile marketing world, it can refer to testing two versions of an ad, app store screenshots, or in-app experience, to name just a few. From the test results, you can determine which performs best according to your KPIs.

The easiest way to test versions against each other is to make sure there is only one difference between them, so you can attribute any change in results to that aspect. The difference can be in the text (body or headline), the banner image, the target audience, and more.

Each version is displayed to a different audience, and the results help us determine which ad works better. Think of it as a digital focus group. To make this concrete, here is an example:

You have an ad; however, you’re not sure what the CTA button should say. You and your colleagues are debating between two versions. So what do you do? Make both, of course. Then you divide your audience in half, send a different ad to each half, and see which version is more engaging and delivers better results.
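The "divide your audience in half" step can be sketched in code. Ad platforms normally handle the split for you, so this is only a minimal illustration for cases where you control the assignment yourself (for example, an in-app experiment). The function name `assign_variant`, the experiment label and the user IDs are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "cta_button_test") -> str:
    """Return "A" or "B" for a user; the same user always gets the same answer."""
    # Hash the experiment name together with the user ID so that different
    # experiments split the same audience independently of each other.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Hypothetical audience of 10,000 users.
users = [f"user-{i}" for i in range(10_000)]
counts = {"A": 0, "B": 0}
for user in users:
    counts[assign_variant(user)] += 1

print(counts)  # the two groups should be close to equal in size
```

Hashing instead of picking randomly on every visit means a returning user never flips between versions, which would otherwise blur the results.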

Mobile App A/B Testing Best Practice

There are a number of ways to go about mobile app A/B testing, some of which are better than others. We’d like to encourage you to take an informed, data-driven approach to testing.

For example, let’s say you have a ride-hailing app. A bad test would be to say, “let’s change the first screenshot and see what happens”.

Compare this to an effective test. To begin with, you gather data: what has user feedback been like? What changes have you made in the past, and what was their impact? You then test an informed assumption: “A screenshot showing our app’s dashboard is likely to convert better than one showing happy users”.

A good test should run for at least seven days and up to fourteen days. Starting at the beginning of the week means you can run full weeks, and a fourteen-day test covers two full weekly cycles. This is important because weekday and weekend traffic often differ. There’s usually a warm-up period that needs to be accounted for before you can start relying on the data, too.

All data should be measured and carefully considered. Testing should be done in consistent environments, so avoid testing during times when results are likely to be skewed (for example, testing a soccer app during the World Cup).

It’s also important not to change the creatives or try to optimize for lower-cost installs during the test, as tempting as this may be. Such changes add extra factors to the test, which compromises the data and often leads to incorrect conclusions.

What is Statistical Significance?

Throughout this article, you’ll find numerous references to statistical significance, so we thought it would be a good idea to introduce you to the concept.

Statistical significance is a way of judging whether your test results are likely to hold for the overall population rather than just your sample. It indicates how unlikely the observed difference between your control version and test version would be if it came from error or random chance alone. For example, at a 95% confidence level, a difference this large would appear less than 5% of the time if the two versions actually performed the same.

Statistical significance is used to better understand when tests or variations need to stop running. Tests or variations aren’t usually stopped before they reach significance unless other considerations are at play, e.g. too many variations, time or budget constraints, etc.

The preliminary work for an A/B test involves calculating the number of samples needed per variation to achieve statistical significance. This relates to the experiment’s accuracy: the more samples there are, the smaller the difference the test can reliably detect.
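As a rough illustration of that preliminary calculation, here is a minimal sketch using the standard normal-approximation formula for comparing two conversion rates. The 30% baseline, the 33% target, the 5% significance threshold and the 80% power are example assumptions, not figures from a real campaign, and dedicated calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist


def samples_per_variation(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate number of users needed in EACH variation.

    n = (z_alpha/2 + z_power)^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided threshold
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Assumed example: store page converts at 30%, and we hope for 33%.
needed = samples_per_variation(0.30, 0.33)
print(f"About {needed} users per variation")

# A smaller expected lift needs far more traffic to detect.
print(samples_per_variation(0.30, 0.31))
```

The second call shows why small expected improvements are expensive to test: the required sample grows with the inverse square of the difference you want to detect.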

If the difference between the best-performing and worst-performing variations is not statistically significant at the end of the test, the test was inconclusive.
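One common way to check whether a difference is significant is a two-proportion z-test. The sketch below is a simplified illustration, not the method any particular store or ad platform uses internally, and the install and visitor numbers are made up.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the conversion rate if both versions performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: A converts 1,200 of 4,000 visitors, B converts 1,320 of 4,000.
z, p_value = two_proportion_z_test(1200, 4000, 1320, 4000)
verdict = "significant" if p_value < 0.05 else "inconclusive"
print(f"z = {z:.2f}, p = {p_value:.4f} -> {verdict} at the 95% level")
```

If the p-value is above your threshold (0.05 for a 95% confidence level), treat the test as inconclusive rather than declaring the variation with the higher rate the winner.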

How to A/B Test?

A few guidelines will help you run tests that produce concrete results and inform your future mobile app A/B testing strategy.

One Variable

The test versions should be identical except for one difference. This is perhaps the most important aspect of the testing. If you test both a different color and different text, how will you know which made the difference? Did version A convert better than version B because the text was more persuasive, or because the color caught attention?

Also, more variations mean fewer clicks per variation, because the total traffic is divided among more versions. With less traffic per variation, it is harder to reach statistical significance and the experiment is harder to conclude.

ABCD Test

Testing only one element at a time means that if you want to test screenshots with both a different background and different text copy, you need one variation that changes only the background, one that changes only the copy, and, if you want to see the combined effect, one that changes both.

The first variation is always the reference point, which for screenshots is in many cases the currently active store variation. For example:

- Variation A: Current (live on the store)

- Variation B: New Design (same copy as variation A)

- Variation C: New Copy (same design as variation A)

- Variation D: New Design + New Copy

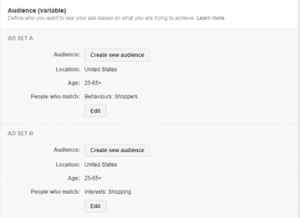
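The four variations above are every combination of two elements with two options each. A small sketch makes that structure explicit and shows which element each variation changes relative to the control; the labels and option names are illustrative only.

```python
from itertools import product

designs = ["current design", "new design"]
copies = ["current copy", "new copy"]

# Build A-D in the same order as the list above:
# A = current/current, B = new design, C = new copy, D = both new.
variations = {
    label: {"design": design, "copy": copy}
    for label, (copy, design) in zip("ABCD", product(copies, designs))
}


def changed_elements(variation, control):
    """List which elements differ from the control variation."""
    return [key for key in control if variation[key] != control[key]]


control = variations["A"]
for label, variation in variations.items():
    changes = changed_elements(variation, control) or ["nothing (control)"]
    print(label, variation, "changes:", ", ".join(changes))
```

Comparing B and C against A isolates one element each, while D shows whether the two changes work together.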

Audience

An audience of only five people is not enough to determine what works and what does not. For such a test, you need to reach a large enough audience to get reliable results. However, when doing so, make sure not to overlap with another campaign that might be live. If a person sees two different ads for the same app, the results might be biased.

You need a significant amount of traffic to each version to reach statistical significance, as touched upon above.

Time Frame

The general rule for a test’s time frame is no fewer than seven days and up to fourteen days. This is enough time to assess the numbers across weekdays and weekends, where traffic differs, and leaves enough time for the test to warm up.
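To sanity-check whether a test fits into this window, you can estimate its length from the traffic you expect. This is a minimal sketch: rounding up to whole weeks is our own assumption based on the weekday/weekend point above, the warm-up period is not modelled, and all numbers are made up.

```python
import math


def planned_duration_days(samples_per_variation, variations, daily_visitors,
                          min_days=7, max_days=14):
    """Return (days to run, whether that fits within max_days)."""
    total_needed = samples_per_variation * variations
    days_needed = math.ceil(total_needed / daily_visitors)
    # Round up to full weeks so weekdays and weekends are covered evenly.
    days = max(min_days, math.ceil(days_needed / 7) * 7)
    return days, days <= max_days


# Made-up example: 3,760 users per variation, 800 store visitors per day.
print(planned_duration_days(3760, variations=2, daily_visitors=800))
print(planned_duration_days(3760, variations=4, daily_visitors=800))
```

When the second value is `False`, the test needs more traffic, fewer variations, or a larger expected difference to finish within two weeks.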

However, remember that this is only a test and not the actual campaign, so you’d want to end it in time so you can get to the real deal.

Budget

Every aspect of such a test on social networks or the app stores comes at a cost. On the one hand, you don’t want to cut corners that would compromise the results. On the other hand, remember it’s just a test: save money for the real campaign you intend to run once you have the results of your A/B test. At the time of writing, the minimum amount for a split test on Facebook is $800, so you can start with that and control your expenses.

Strategies

Almost every single part of your app store presence can be tested and improved upon. Below we’ve highlighted some key areas to look at first when it comes to the ultimate app store testing strategy.

1. Keywords

While keywords pack the least amount of ASO “power” (when compared to factors such as title and subtitle on iOS, or title and short description on Android), they are definitely an area in which ASO performance can be lifted. And when it comes to testing keywords, not only should individual keywords be tested, but also the interplay between keywords and images. Important questions to ask at this stage when testing your keyword strategy are:

- Should we focus on broader keywords or very narrow keywords for specific niches?

- Should we use more provocative keywords?

2. App Title & Subtitle

The app title and subtitle don’t live in isolation. They have to be an integral part of the keyword and creative strategy. The big question is: should you go for the most competitive terms with the most traffic, or for long-tail keywords that let you rank more easily in their niches?

3. Icon

The app icon is extra critical because depending on how a user got to your app, it might be the only creative they see before deciding to go ahead and explore further. Testing here should include:

- Colors

- Images vs sketches vs logos

- On Google Play – should the background be round or in another shape?

- Design (e.g. “flat” vs 3D)

4. Creatives

It’s interesting to note that even the biggest apps in the world are testing and changing around their creative assets four to five times every month.

Testing your creatives covers everything from:

- Images vs video (e.g. using just screenshots or screenshots in addition to an app store preview video)

- Aspect ratios (resolutions and sizes like iPhone 6s vs iPhone 12 Pro sizes)

- Which aspect of the app to show first (order of the unique selling propositions)

- Design style and colors

- Captions and calls to action on top of the screenshots

- Layout (portrait vs landscape)

- Look and feel (think “busy” versus “minimalist”)

- Screenshots, specifically individual screenshots or composite images

What to A/B Test?

Now it’s time to get serious!

Which aspects of your campaign do you want to test? Here are a few possibilities:

Test Components

You are going live with an ad or app store screenshot update – should it be red or green? Should the title say “learn more” or “sign up”? These are aspects that can prove crucial, and you can try two versions and see which works best. However – as written before – make sure to only test one component at a time. If you are not sure about several aspects you can run multiple tests, but not simultaneously.

Age groups

Is your app better for kids, teens, millennials or older adults? You can see which age group reacts better to it through A/B testing. This aspect can also give you deeper insights into your app, and not only into the specific ads, app page or screenshots you’re testing. Don’t forget to find appropriate age groups – don’t advertise an app for baby food to pensioners.

Location – States/ Countries

Who responds better to your app – Americans or Australians? East Coast or Midwest? You can test this simply by targeting two groups who live in different places and seeing who reacts better. Note: in this scenario you need to send the exact same variation to both groups.

A/B Testing on Facebook

So, you’ve decided what you want to test, how you want to test it, created your ads and everything is ready. Now what? Facebook offers many tools to help with A/B testing, also known as Split Tests. Each tool is flexible and can be adapted to any app, taking into consideration time, budget and more.

One example can be found in a past campaign we ran, in which we tested the buttons. While the difference between “sign up” vs “learn more” may seem small and insignificant, the results were conclusive – one ad worked better than the other, and by far. Now, that doesn’t mean the other ad was wrong, just that for this specific ad of that exact product – one CTA worked better. That’s the importance of split testing.

Here are a couple of tools we regularly use when A/B testing on Facebook:

Auto/Manual Bid

Ever wondered which method delivers the best results? Facebook bid types allow you to test different methods of optimization. This tool enables you to decide if you want Facebook to run your ads automatically, or if you prefer to manually control them.

Lookalike Audience

With this advanced tool by Facebook, you can target people who are more likely to install your app. Facebook creates a broad list of potential users, based on similar characteristics to current users, and allows you to adjust the exposure percentage, i.e. how wide/narrow your targeted audience will be. You can try different exposure rates and determine which works best for you.

While the concept of A/B testing has existed in digital ad marketing for many years, it took longer for Facebook to create a simple tool that lets advertisers run experiments conveniently, which it calls Split Testing.

Testing For Success

Testing becomes really effective when it is done continuously. By making continuous testing part of your ASO strategy’s DNA, you give yourself the best chance of seeing results.


Google Play Experiments

For Google Play A/B testing, Google Play Experiments (GPE) allows you to test several predefined assets of an app listing at one time. Each variation appears before a certain percentage of live users. It provides insights for free, whereas tools such as SplitMetrics and StoreMaven come at a high price.

GPE allows you to see:

- The number of variations and the percentage of users each one is shown to

- How long the experiment ran for

- The type of experiment

- How well each experiment performed against the others (e.g. how many installs each gained, how well they performed compared to existing version)

- The overall results

Remember, Google Play Experiments should run for at least one full week, because weekday and weekend traffic can differ and the results need to reflect both.

iOS A/B Testing

At the time of this update, native A/B testing for iOS is unavailable, but this is set to change with iOS 15. App Store A/B testing will become possible as the App Store adds testing features, with three variations of screenshots able to be tested against the control.

The App Store’s new features allow for one default page for organic traffic and three slots for testing pages against the default.

All organic traffic will be set to a default page, much like before. However, you will have the option to test organic traffic with different calls to action and different design sets.

You can read more about Apple’s A/B testing, and how to run it once it arrives with iOS 15, in our iOS 15 breakdown.


FAQs

What is A/B testing?

A/B testing simply means that you create two versions of something where each one is slightly different and test them against each other with an audience sample.

Can you A/B test on the App Store?

Currently, no, but with the impending arrival of iOS 15, marketers will be able to test different versions in the App Store itself, something previously only possible with third-party tools.

How do you A/B test on Google Play?

You can use Google Play Experiments. GPE allows you to test several predefined assets of an app listing at one time. Each variation appears before a certain percentage of live users. It provides insights for free.